Language-Visual Saliency with CLIP and OpenVINO™ — OpenVINO™ documentation

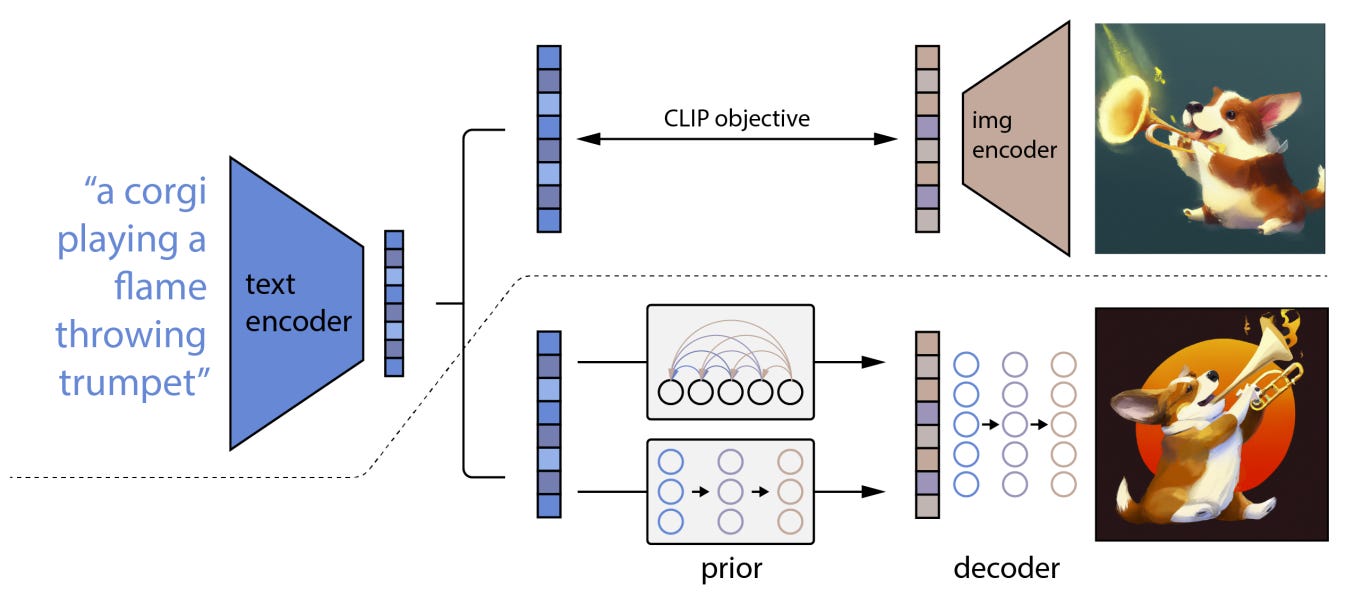

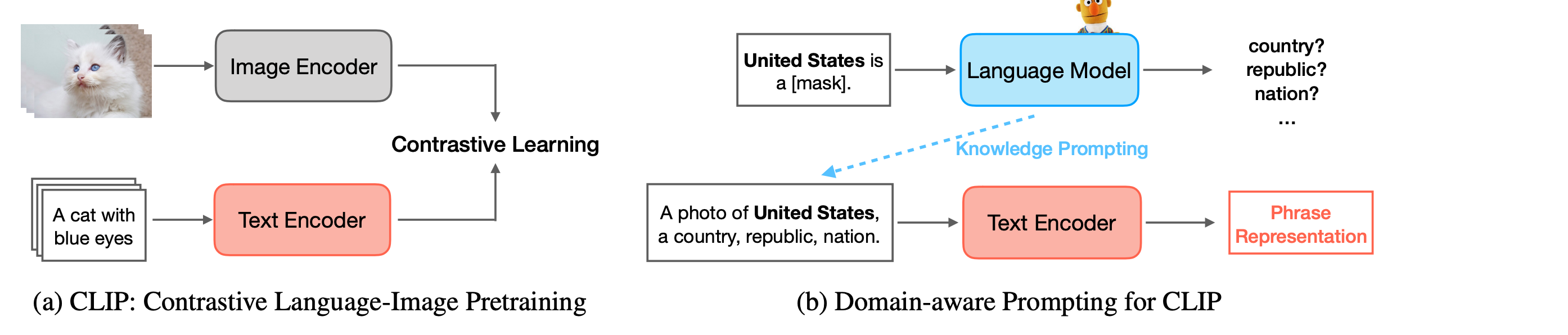

Illustration of the (a) standard vision-language model CLIP [35]. (b)... | Download Scientific Diagram

Meet CLIPDraw: Text-to-Drawing Synthesis via Language-Image Encoders Without Model Training | Synced

Researchers at Microsoft Research and TUM Have Made Robots to Change Trajectory by Voice Command Using A Deep Machine Learning Model - MarkTechPost

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

Hao Liu on Twitter: "How to pretrain large language-vision models to help seeing, acting, and following instructions? We found that using models jointly pretrained on image-text pairs and text-only corpus significantly outperforms

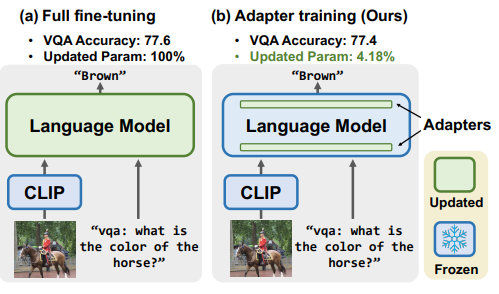

![CLIP-Adapter: Better Vision-Language Models with Feature Adapters (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/c04067f03fba2df0c14ea51a170f213eb2983708/2-Figure1-1.png)