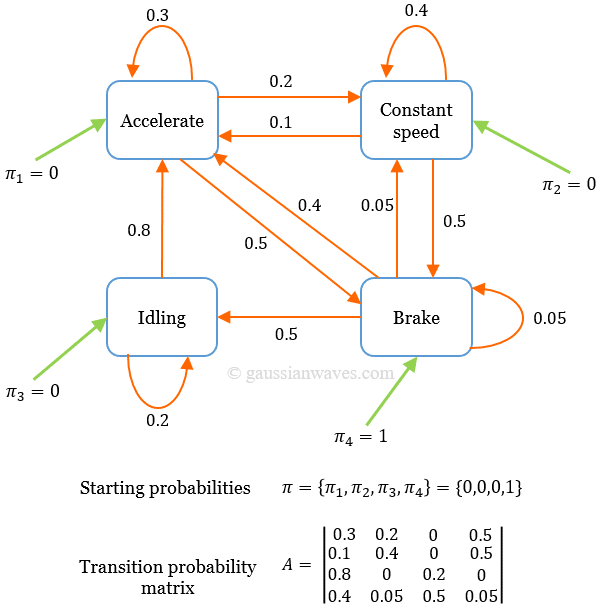

Consider a 4-state Markov chain with the transition probability matrix

$$
P = \begin{pmatrix}
0.1 & 0.2 & 0.3 & 0.4 \\
0 & 0.5 & 0.2 & 0.3 \\
0 & 0 & 0.3 & 0.7 \\
0 & 0 & 0.1 & 0.9
\end{pmatrix}
$$

(a) Draw the state transition diagram, with the … | Homework.Study.com
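Drawing the diagram amounts to making one node per state and one directed arrow i → j for every positive entry of row i, labelled with that probability. The sketch below does this under some assumptions. The states are labelled 1 to 4, because the problem text does not name them. The helper names `check_stochastic`, `transition_edges` and `to_dot` are hypothetical. The diagram is emitted as Graphviz DOT text instead of being drawn directly.

```python
# Transition matrix of the 4-state chain. Row i gives the probabilities of
# moving from state i+1 to states 1..4 (the labels 1-4 are an assumption).
P = [
    [0.1, 0.2, 0.3, 0.4],
    [0.0, 0.5, 0.2, 0.3],
    [0.0, 0.0, 0.3, 0.7],
    [0.0, 0.0, 0.1, 0.9],
]


def check_stochastic(matrix, tol=1e-12):
    """Return True if every row is non-negative and sums to 1 (within tol)."""
    return all(
        all(p >= 0 for p in row) and abs(sum(row) - 1.0) <= tol
        for row in matrix
    )


def transition_edges(matrix):
    """List (from_state, to_state, prob) for each positive entry, 1-based labels."""
    return [
        (i + 1, j + 1, p)
        for i, row in enumerate(matrix)
        for j, p in enumerate(row)
        if p > 0
    ]


def to_dot(matrix):
    """Render the state transition diagram as Graphviz DOT source text."""
    lines = ["digraph markov {", "    rankdir=LR;"]
    for src, dst, p in transition_edges(matrix):
        lines.append(f'    {src} -> {dst} [label="{p}"];')
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    assert check_stochastic(P)
    print(to_dot(P))
```

The output contains 11 arrows, including self-loops. It has no arrow from state 3 or 4 back to state 1 or 2, so once the chain enters {3, 4} it stays there.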

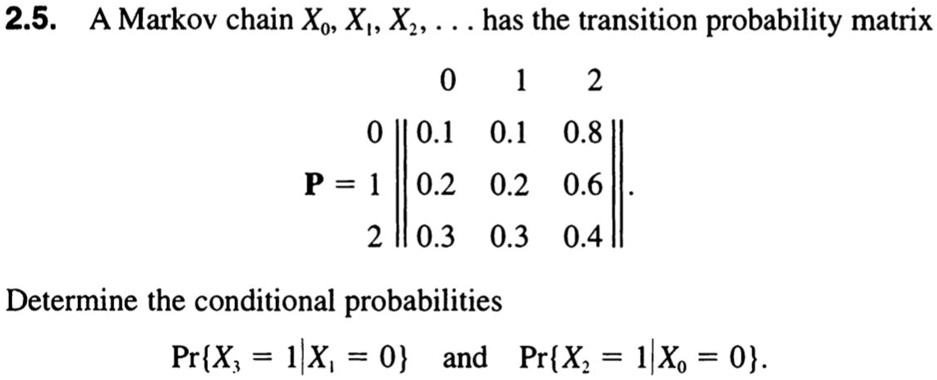

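SOLVED: 2.5. A Markov chain $X_0, X_1, X_2, \ldots$ has the transition probability matrix

$$
P = \begin{array}{c|ccc}
 & 0 & 1 & 2 \\ \hline
0 & 0.1 & 0.1 & 0.8 \\
1 & 0.2 & 0.2 & 0.6 \\
2 & 0.3 & 0.3 & 0.4
\end{array}
$$

Determine the conditional probabilities $\Pr\{X_3 = 1 \mid X_1 = 0\}$ and $\Pr\{X_2 = 1 \mid X_0 = 0\}$.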
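A two-step conditional probability such as $\Pr\{X_2 = 1 \mid X_0 = 0\}$ is the entry in row 0, column 1 of $P^2$. You get it by multiplying row 0 of $P$ by column 1: $0.1 \cdot 0.1 + 0.1 \cdot 0.2 + 0.8 \cdot 0.3 = 0.27$. A single matrix $P$ describes a time-homogeneous chain, so any two-step probability $\Pr\{X_{n+2} = 1 \mid X_n = 0\}$ is that same entry. The sketch below is a minimal plain-Python check of this arithmetic. The helper names `mat_mul` and `n_step` are hypothetical, and NumPy would do the same with `numpy.linalg.matrix_power`.

```python
# Transition matrix for states 0, 1, 2 (rows = current state, columns = next state).
P = [
    [0.1, 0.1, 0.8],
    [0.2, 0.2, 0.6],
    [0.3, 0.3, 0.4],
]


def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def n_step(matrix, n):
    """Return the n-step transition matrix P^n by repeated multiplication."""
    size = len(matrix)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, matrix)
    return result


P2 = n_step(P, 2)
# Two-step probability Pr{X_2 = 1 | X_0 = 0} = (P^2)[0][1]; for a
# time-homogeneous chain, Pr{X_{n+2} = 1 | X_n = 0} is the same entry.
print(round(P2[0][1], 10))  # prints 0.27
```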