Entropy | On the Structure of the World Economy: An Absorbing Markov Chain Approach

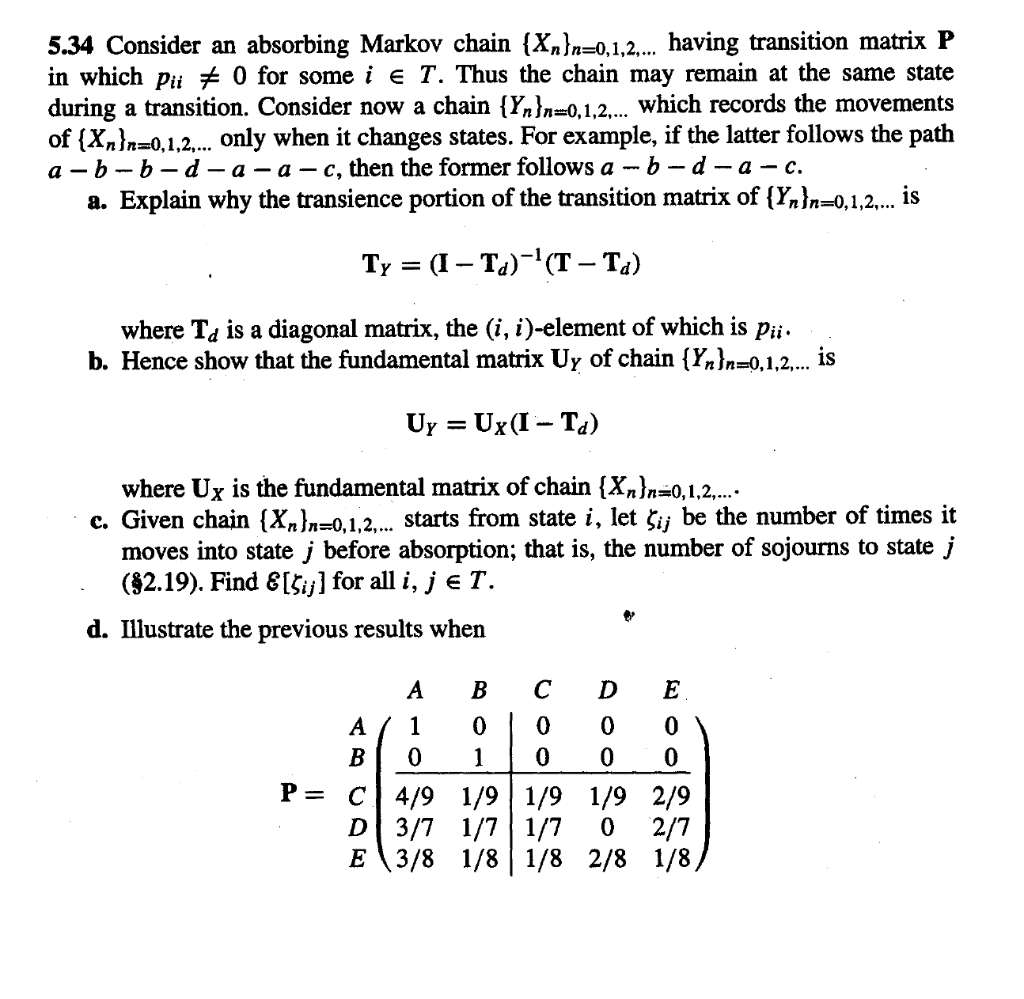

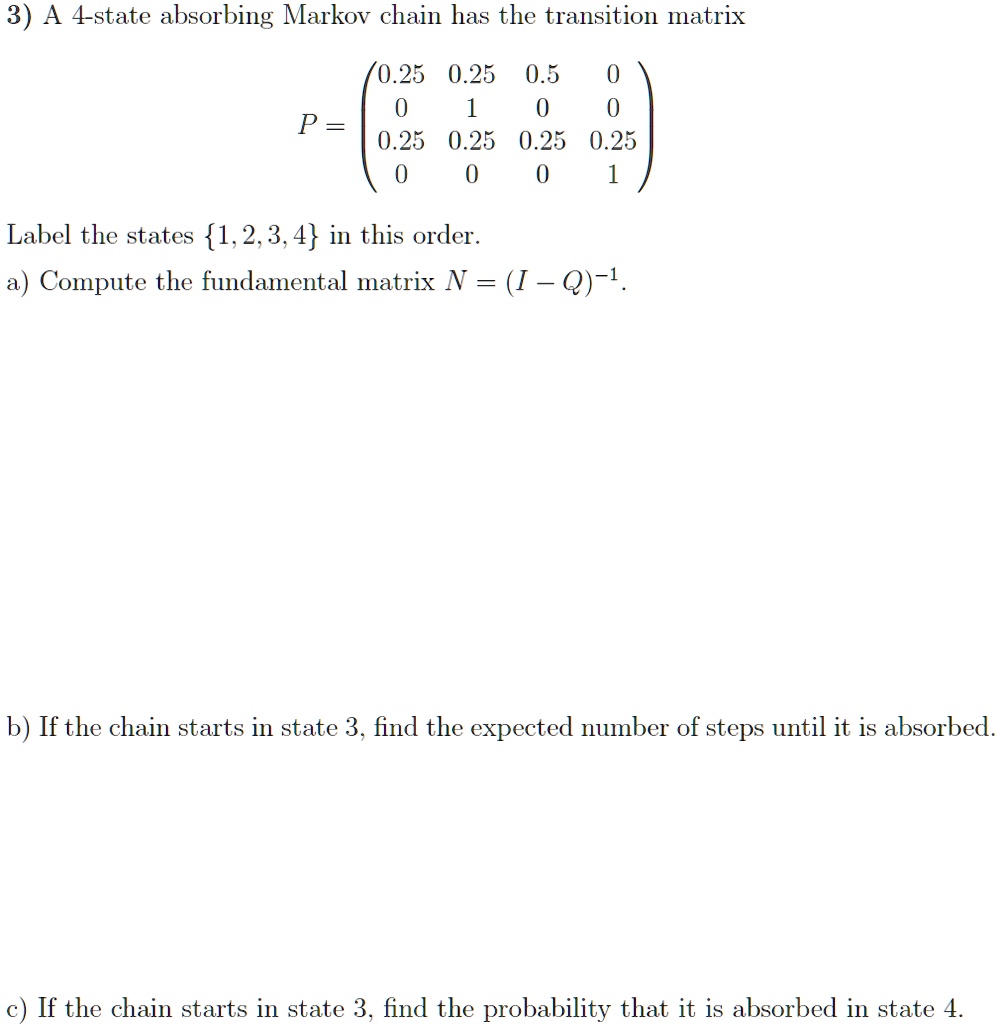

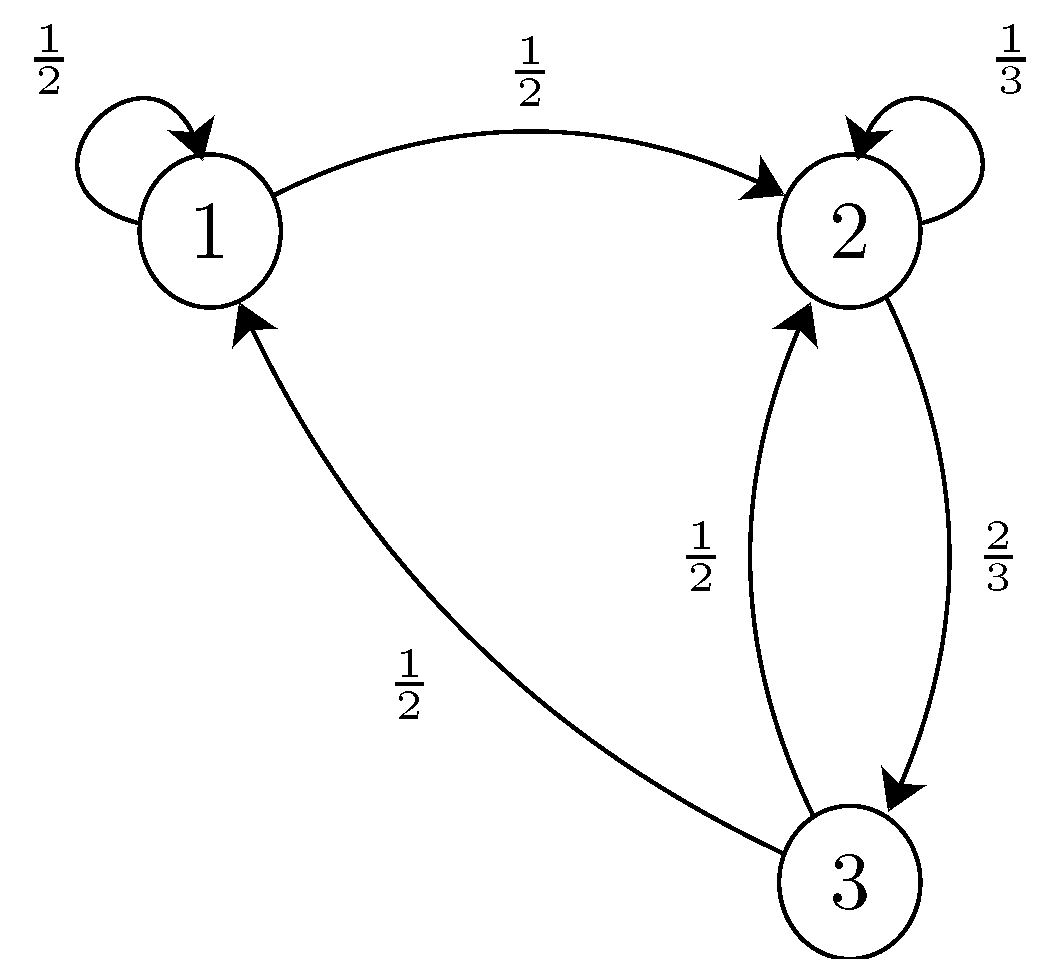

SOLVED: 3) A 4-state absorbing Markov chain has the transition matrix 0.25 0.25 0.5 P = 0.25 0.25 0.25 0.25. Label the states 1, 2, 3, 4 in this order. Compute the fundamental matrix N = (
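For reference, here is the standard computation this kind of exercise asks for. List the transient states first. P then has a canonical block form with Q (transient to transient) and R (transient to absorbing). The fundamental matrix is N = (I - Q)^{-1}, and its entry N[i][j] is the expected number of visits to transient state j when starting from transient state i. The row sums of N give the expected number of steps before absorption, and B = NR gives the absorption probabilities. The sketch below is a minimal illustration that assumes exact `Fraction` entries and uses a hypothetical 4-state matrix, because the exercise's own matrix is only partially legible. The helper names are illustrative, and states are 0-indexed.

```python
from fractions import Fraction as F


def invert(matrix):
    """Invert a square matrix of Fractions by Gauss-Jordan elimination."""
    n = len(matrix)
    # Augment [A | I] and reduce the left half to the identity.
    aug = [list(row) + [F(int(i == j)) for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        # I - Q is invertible for an absorbing chain, so a non-zero pivot exists.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def absorbing_analysis(P):
    """Return (transient, absorbing, N, t, B) for an absorbing chain's matrix P."""
    n = len(P)
    absorbing = [i for i in range(n) if P[i][i] == 1]
    # In an absorbing chain every non-absorbing state is transient.
    transient = [i for i in range(n) if i not in absorbing]
    Q = [[P[i][j] for j in transient] for i in transient]
    R = [[P[i][j] for j in absorbing] for i in transient]
    m = len(transient)
    I_minus_Q = [[F(int(i == j)) - Q[i][j] for j in range(m)] for i in range(m)]
    N = invert(I_minus_Q)        # fundamental matrix
    t = [sum(row) for row in N]  # expected steps before absorption
    B = matmul(N, R)             # absorption probabilities
    return transient, absorbing, N, t, B


# Hypothetical example: states 0 and 1 are transient, 2 and 3 are absorbing.
P = [
    [F(1, 2), F(1, 4), F(1, 4), F(0)],
    [F(1, 4), F(1, 4), F(0), F(1, 2)],
    [F(0), F(0), F(1), F(0)],
    [F(0), F(0), F(0), F(1)],
]
transient, absorbing, N, t, B = absorbing_analysis(P)
print(N)  # [[Fraction(12, 5), Fraction(4, 5)], [Fraction(4, 5), Fraction(8, 5)]]
```

Exact fractions keep the answers readable for small textbook matrices. For large chains, a floating-point linear solver would be the usual choice.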

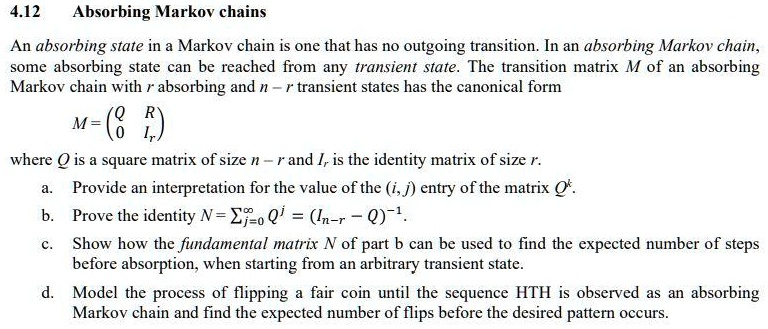

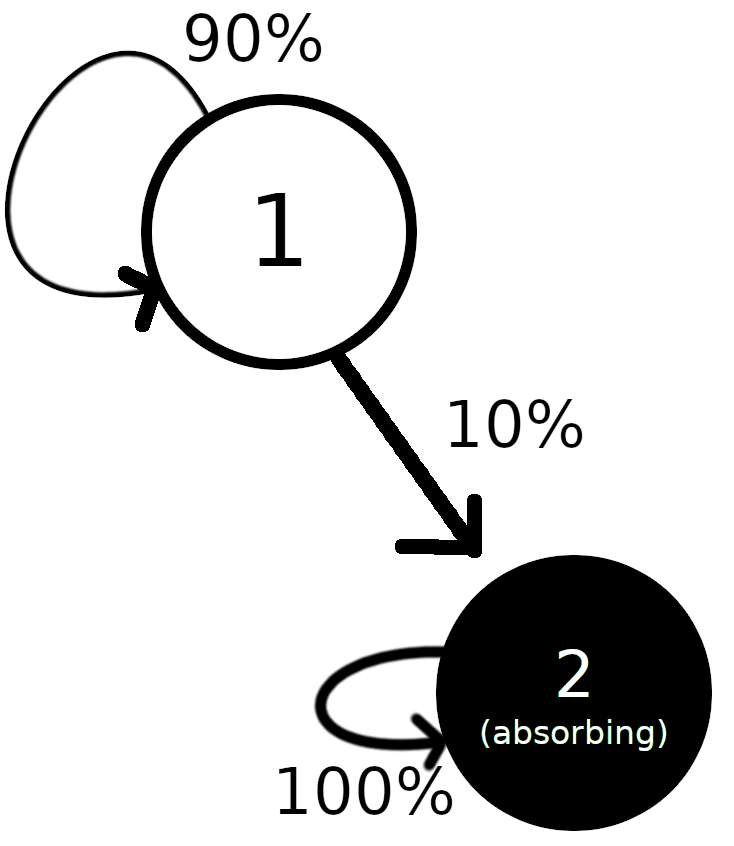

SOLVED: 4.12 Absorbing Markov chains. An absorbing state in a Markov chain is one that has no outgoing transition to any other state. In an absorbing Markov chain, some absorbing state can be reached from any transient
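This definition can be checked mechanically. A state i is absorbing when P[i][i] = 1. The chain is absorbing when at least one such state exists and every state has a path of positive-probability transitions to some absorbing state. The sketch below assumes the transition matrix is a list of rows whose entries compare exactly against 1. The helper names and the example matrices are hypothetical and are not taken from the sources listed here.

```python
from collections import deque


def absorbing_states(P):
    """States i with P[i][i] == 1: once entered, the chain never leaves."""
    return [i for i in range(len(P)) if P[i][i] == 1]


def is_absorbing_chain(P):
    """True if an absorbing state exists and one is reachable from every state."""
    n = len(P)
    targets = set(absorbing_states(P))
    if not targets:
        return False
    # Breadth-first search backwards from the absorbing states:
    # state i can reach j in one step when P[i][j] > 0.
    can_reach = set(targets)
    queue = deque(targets)
    while queue:
        j = queue.popleft()
        for i in range(n):
            if P[i][j] > 0 and i not in can_reach:
                can_reach.add(i)
                queue.append(i)
    return len(can_reach) == n


# Hypothetical 4-state chain: 0 -> 1 -> 2, with 2 and 3 absorbing.
chain = [
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.5, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
print(absorbing_states(chain))      # [2, 3]
print(is_absorbing_chain(chain))    # True

# States 0 and 1 only swap with each other, so neither can reach state 2.
trapped = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
]
print(is_absorbing_chain(trapped))  # False
```

The second example has an absorbing state but is still not an absorbing chain. This shows why the reachability condition in the definition matters.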

Dynamic risk stratification using Markov chain modelling in patients with chronic heart failure - Kazmi - 2022 - ESC Heart Failure - Wiley Online Library

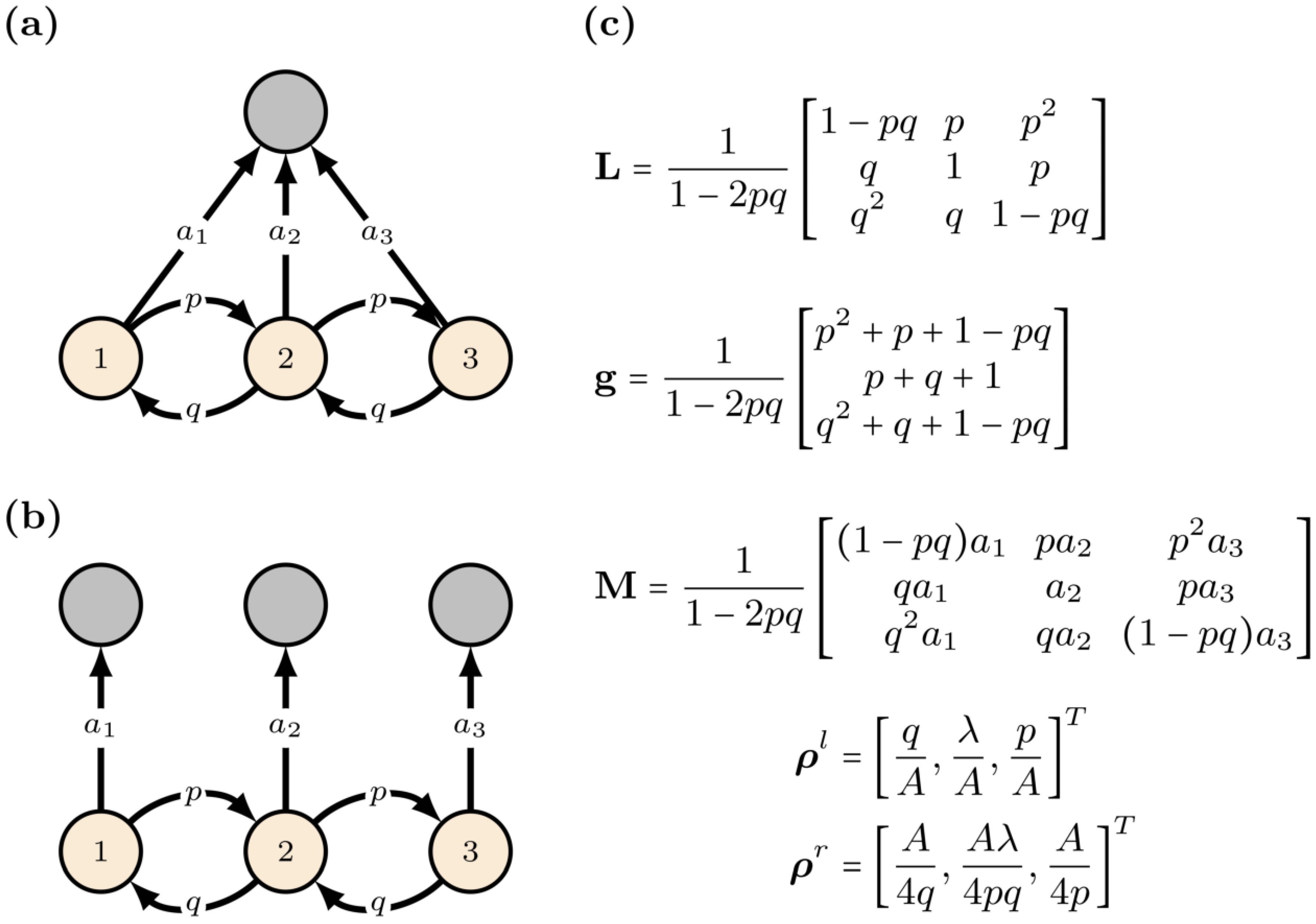

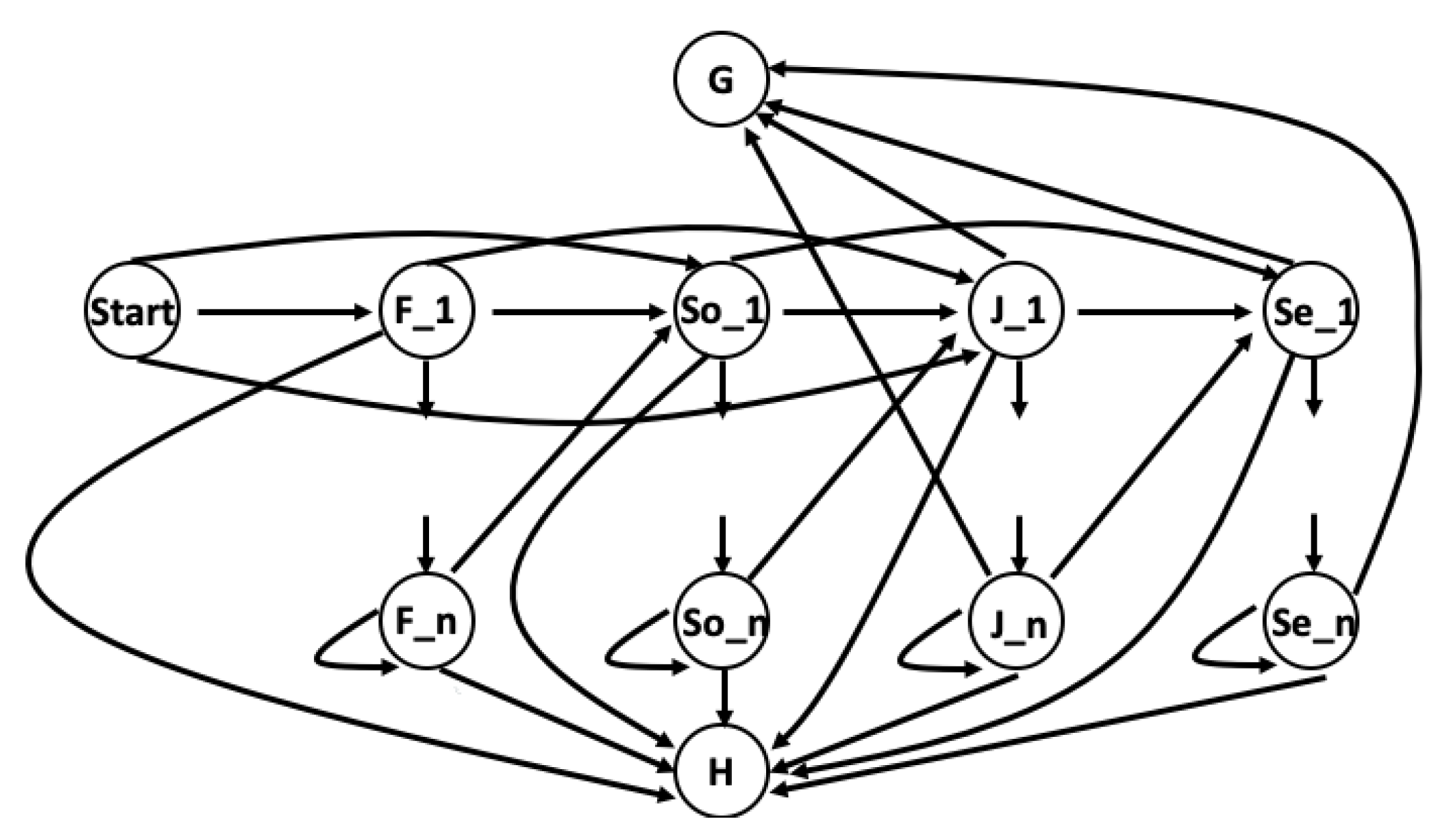

a) The transition graph of an absorbing Markov chain with 4 states,...

![The Engel algorithm for absorbing Markov chains | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/33ea0eefa3ac1f3b9de12e34e9e680ffba74c3ce/3-Figure1-1.png)